A Well-Kept Secret

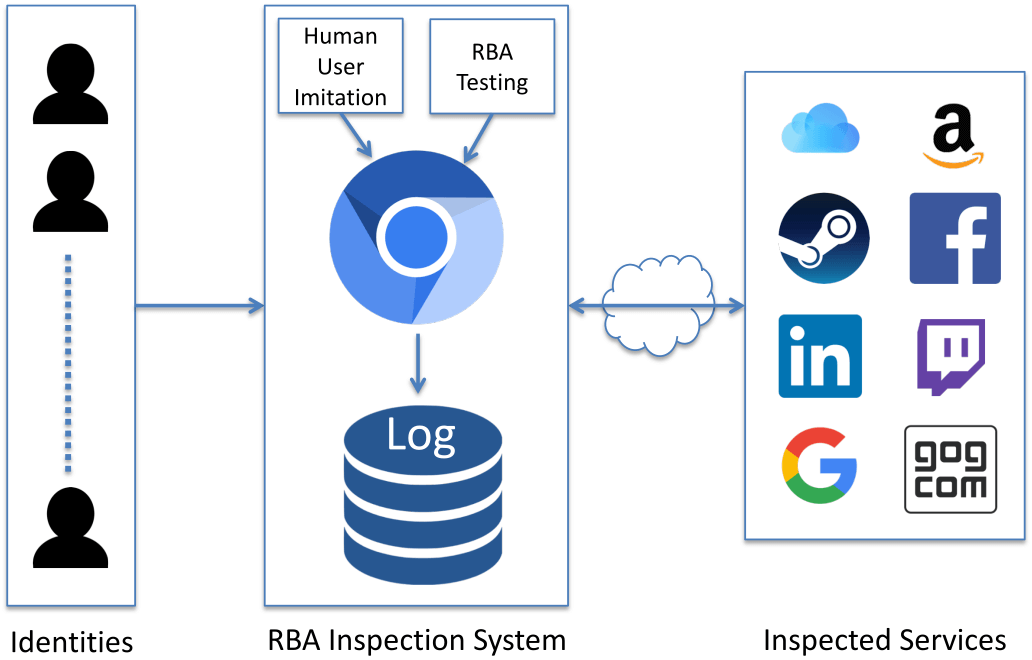

Despite the importance of RBA, online services kept their use of it secret. We therefore black-box tested eight popular online services in 2018 to find out:

- Are they using RBA?

And if yes:

- How do they calculate the risk score?

- What additional authentication factors are they offering?

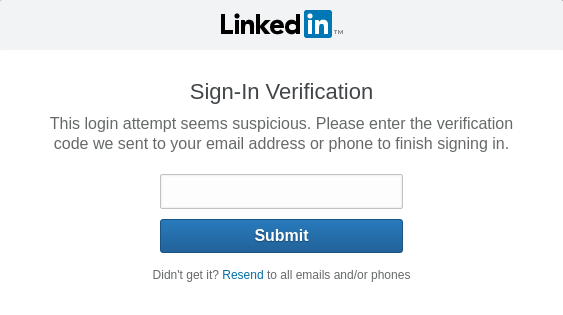

- What do the RBA dialogs look like?

Who Uses RBA?

We found evidence that Google, Facebook, LinkedIn, Amazon and GOG.com were using it.

Want to know how they are using it?

What Can Go Wrong?

In our study, Facebook’s verification code feature leaked the user's full phone number. We consider this bad practice and a threat to privacy, because it lets attackers obtain users' phone numbers. Attackers can also call that number and use social engineering to trick the user into revealing the verification code.

Thanks to Facebook's prompt response, this vulnerability has since been fixed:

- We contacted Facebook about the phone number leak on September 4th, 2018.

- Facebook resolved the issue on September 6th, 2018.
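A common way to avoid such a leak is to show only the last few digits of the phone number, which is enough for the account owner to recognize it but reveals little to anyone else. The following is a minimal sketch of that idea, not Facebook's actual fix; the function name `mask_phone_number` and the default of two visible digits are hypothetical choices.

```python
def mask_phone_number(number: str, visible_digits: int = 2) -> str:
    """Replace all but the last `visible_digits` digits with '*'.

    Non-digit characters such as '+', spaces or dashes are kept so the
    overall format stays recognizable to the account owner.
    """
    total_digits = sum(ch.isdigit() for ch in number)
    digits_to_hide = max(total_digits - visible_digits, 0)
    masked = []
    for ch in number:
        if ch.isdigit() and digits_to_hide > 0:
            masked.append("*")  # hide this digit
            digits_to_hide -= 1
        else:
            masked.append(ch)  # keep separators and the trailing digits
    return "".join(masked)


print(mask_phone_number("+49 170 1234567"))  # prints "+** *** *****67"
```

Hiding the country code as well is a deliberately conservative choice in this sketch; a service might choose to keep it visible.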

Technical Paper

More details can be found in our publication below, which was published at IFIP SEC 2019.

Is This Really You? An Empirical Study on Risk-Based Authentication Applied in the Wild

Stephan Wiefling, Luigi Lo Iacono, and Markus Dürmuth

Abstract

Risk-based authentication (RBA) is an adaptive security measure to strengthen password-based authentication. RBA monitors additional implicit features during password entry such as device or geolocation information, and requests additional authentication factors if a certain risk level is detected. RBA is recommended by the NIST digital identity guidelines, is used by several large online services, and offers protection against security risks such as password database leaks, credential stuffing, insecure passwords and large-scale guessing attacks. Despite its relevance, the procedures used by RBA-instrumented online services are currently not disclosed. Consequently, there is little scientific research about RBA, slowing down progress and deeper understanding, making it harder for end users to understand the security provided by the services they use and trust, and hindering the widespread adoption of RBA.

In this paper, with a series of studies on eight popular online services, we (i) analyze which features and combinations/classifiers are used and are useful in practical instances, (ii) develop a framework and a methodology to measure RBA in the wild, and (iii) survey and discuss the differences in the user interface for RBA. Following this, our work provides a first deeper understanding of practical RBA deployments and helps to foster further research in this direction.
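Because the studied services do not disclose how they calculate the risk score, the following is only a minimal sketch of the general RBA idea described above, not the procedure of any of these services. It assumes a hypothetical model in which each login feature (IP address, country, browser user agent) has a fixed weight, the risk score is the sum of the weights of all features never seen in the user's previous logins, and an additional authentication factor is requested once the score reaches a threshold. The names `WEIGHTS`, `CHALLENGE_THRESHOLD`, `LoginAttempt`, `risk_score` and `decide`, as well as all numeric values, are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical feature weights: an unfamiliar country counts more than an
# unfamiliar IP address, since IP addresses change more often in practice.
WEIGHTS = {"ip": 0.2, "country": 0.5, "user_agent": 0.3}
# Hypothetical risk level at which an additional factor is requested.
CHALLENGE_THRESHOLD = 0.4


@dataclass(frozen=True)
class LoginAttempt:
    ip: str
    country: str
    user_agent: str


def risk_score(attempt: LoginAttempt, history: list) -> float:
    """Sum the weights of all features not seen in earlier successful logins."""
    score = 0.0
    for feature, weight in WEIGHTS.items():
        seen = {getattr(past, feature) for past in history}
        if getattr(attempt, feature) not in seen:
            score += weight
    return score


def decide(attempt: LoginAttempt, history: list) -> str:
    """Called after the password was verified: grant access or challenge."""
    if risk_score(attempt, history) >= CHALLENGE_THRESHOLD:
        return "request_additional_factor"
    return "grant_access"


history = [LoginAttempt("203.0.113.7", "DE", "Firefox/Linux")]
# Only the IP address is new (score 0.2): access is granted.
print(decide(LoginAttempt("203.0.113.9", "DE", "Firefox/Linux"), history))
# New IP, country and browser (score 1.0): an additional factor is requested.
print(decide(LoginAttempt("198.51.100.4", "US", "Chrome/Windows"), history))
```

With an empty login history every feature is unfamiliar, so this sketch always challenges a user's very first login; real deployments may treat that case differently.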

If you would like to cite the paper, please use the following BibTeX entry:

@inproceedings{Wiefling_Is_2019,

author = {Wiefling, Stephan and Lo Iacono, Luigi and D\"{u}rmuth, Markus},

title = {Is {This} {Really} {You}? {An} {Empirical} {Study} on {Risk}-{Based} {Authentication} {Applied} in the {Wild}},

booktitle = {34th {IFIP} {TC}-11 {International} {Conference} on {Information} {Security} and {Privacy} {Protection} ({IFIP} {SEC} 2019)},

series = {{IFIP} {Advances} in {Information} and {Communication} {Technology}},

volume = {562},

pages = {134--148},

isbn = {978-3-030-22311-3},

doi = {10.1007/978-3-030-22312-0_10},

publisher = {Springer International Publishing},

location = {Lisbon, Portugal},

month = jun,

year = {2019}

}

Black-Box Testing Tool

We published more details about our black-box testing tool at NordSec 2019.

Even Turing Should Sometimes Not Be Able To Tell: Mimicking Humanoid Usage Behavior for Exploratory Studies of Online Services

Stephan Wiefling, Nils Gruschka, and Luigi Lo Iacono

Abstract

Online services such as social networks, online shops, and search engines deliver different content to users depending on their location, browsing history, or client device. Since these services have a major influence on opinion forming, understanding their behavior from a social science perspective is of greatest importance. In addition, technical aspects of services such as security or privacy are becoming more and more relevant for users, providers, and researchers. Due to the lack of essential data sets, automatic black box testing of online services is currently the only way for researchers to investigate these services in a methodical and reproducible manner. However, automatic black box testing of online services is difficult since many of them try to detect and block automated requests to prevent bots from accessing them.

In this paper, we introduce a testing tool that allows researchers to create and automatically run experiments for exploratory studies of online services. The testing tool performs programmed user interactions in such a manner that it can hardly be distinguished from a human user. To evaluate our tool, we conducted, among other things, a large-scale research study on Risk-based Authentication (RBA), which required human-like behavior from the client. We were able to circumvent the bot detection of the investigated online services with the experiments. As this demonstrates the potential of the presented testing tool, it remains the responsibility of its users to balance the conflicting interests between researchers and service providers as well as to check whether their research programs remain undetected.
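One ingredient of human-like interaction is that people do not type at a constant machine speed. The following minimal sketch, which is not the tool's implementation, pairs each character with a randomized delay. The Gaussian delay model, its parameter values and the name `typing_schedule` are assumptions for illustration only; a fixed seed keeps an experiment run reproducible.

```python
import random
from typing import List, Optional, Tuple


def typing_schedule(text: str, mean_ms: float = 180.0, stddev_ms: float = 60.0,
                    min_ms: float = 40.0,
                    seed: Optional[int] = None) -> List[Tuple[str, float]]:
    """Pair each character with a randomized delay (in ms) before it is typed.

    Delays are drawn from a normal distribution (hypothetical parameters)
    and clipped at `min_ms` so that no keystroke follows unrealistically fast.
    """
    rng = random.Random(seed)  # seeded generator for reproducible runs
    schedule = []
    for ch in text:
        delay = max(rng.gauss(mean_ms, stddev_ms), min_ms)
        schedule.append((ch, delay))
    return schedule


for ch, delay in typing_schedule("hello", seed=42):
    print(f"{ch!r} after {delay:.0f} ms")
```

A browser automation framework would then send each character after its scheduled delay.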

If you would like to cite the paper, please use the following BibTeX entry:

@inproceedings{Wiefling_Even_2019,

title = {Even {Turing} {Should} {Sometimes} {Not} {Be} {Able} {To} {Tell}: {Mimicking} {Humanoid} {Usage} {Behavior} for {Exploratory} {Studies} of {Online} {Services}},

booktitle = {24th {Nordic} {Conference} on {Secure} {IT} {Systems} ({NordSec} 2019)},

series = {{Lecture} {Notes} in {Computer} {Science}},

author = {Wiefling, Stephan and Gruschka, Nils and Lo Iacono, Luigi},

volume = {11875},

pages = {188--203},

isbn = {978-3-030-35055-0},

doi = {10.1007/978-3-030-35055-0_12},

publisher = {Springer Nature},

location = {Aalborg, Denmark},

month = nov,

year = {2019}

}